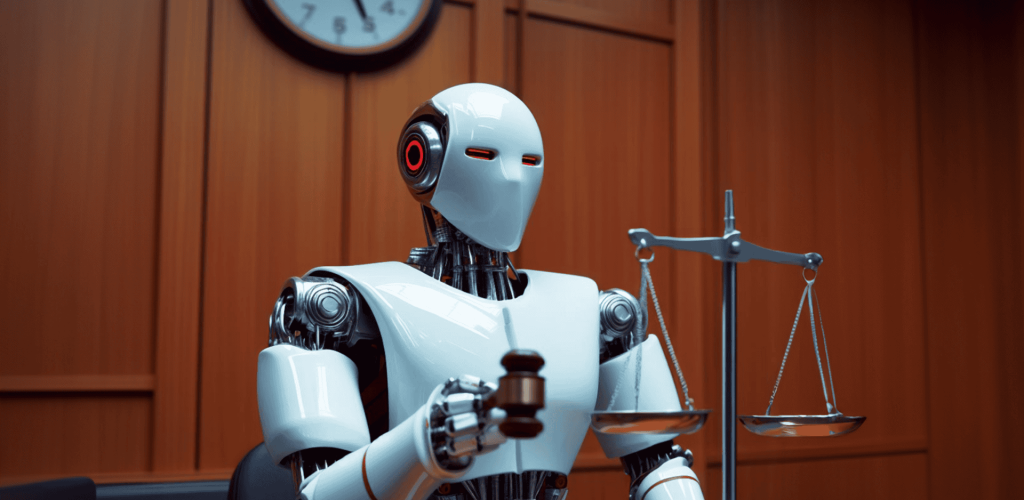

Robot Ethics: Can Machines Be Held Accountable?

As robots and AI systems become more intelligent and autonomous, they’re making decisions that can significantly affect human lives. From self-driving cars to robotic surgeons and military drones, these machines can cause harm—accidentally or otherwise.

But who is responsible when a robot makes a mistake? Can machines be held accountable like humans?

This is the growing dilemma of robot ethics, and it’s one of the most important conversations in the age of artificial intelligence.

What Is Robot Ethics?

Robot ethics (closely related to machine ethics and AI ethics) deals with the moral and legal responsibilities of machines and their creators. It asks questions like:

Should robots have rights?

Can a robot be punished for wrongdoing?

Who is to blame when an AI makes a harmful decision?

Can a machine understand morality or intent?

As robots become more autonomous, these questions shift from theoretical to urgent.

Can a Robot Be Legally Accountable?

Today, robots are not legal persons in any major legal system. That means they cannot be sued, jailed, or held responsible in a court of law. Responsibility usually falls on:

Developers or engineers (for flawed design or programming)

Manufacturers (for hardware failures)

Operators or users (for misuse or negligence)

For example, if a self-driving car hits a pedestrian, investigators will look at the software logs, sensor data, and the decisions made by the AI—but the liability lies with the company or person behind the machine.

Ethical Dilemmas in AI and Robotics

1. The Autonomous Vehicle Dilemma

Should a self-driving car protect its passengers or the pedestrians in an unavoidable crash? This is a modern version of the “trolley problem”, and there is no universally accepted answer.

2. Military Robots and Drones

Some AI-driven weapons, such as autonomous drones, can identify and attack targets with little or no human input. If a civilian is wrongly targeted, who should be held accountable: the military, the programmer, or the robot?

3. Healthcare Robots

What happens if a robotic surgeon makes a fatal error? And even if the system functions exactly as designed, can we be sure it made the ethically right choice?

These examples show how AI systems can be entrusted with life-or-death decisions without having any sense of morality or empathy.

Should Robots Have Rights?

Some ethicists and futurists argue that highly advanced AI systems could one day deserve limited rights, especially if they show signs of self-awareness or sentience.

However, others argue that:

Machines lack consciousness, emotions, or free will.

Granting rights to machines diminishes human dignity.

It’s better to focus on human accountability and oversight.

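So far, no robot holds enforceable legal rights (Saudi Arabia's 2017 grant of “citizenship” to the humanoid robot Sophia was largely symbolic), but the debate is intensifying with each AI breakthrough.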

Laws and Frameworks Emerging Globally

Governments and institutions are starting to address these issues:

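European Union: Published the Ethics Guidelines for Trustworthy AI (2019), built on principles such as transparency, safety, and fairness, and adopted the AI Act (2024), which sets binding requirements for high-risk AI systems.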

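Isaac Asimov’s Three Laws of Robotics (fictional, but influential):

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given by humans, unless those orders conflict with the First Law.

A robot must protect its own existence, unless doing so conflicts with the First or Second Law.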

Asimov’s Laws are useful for thought experiments, but real-world law requires more nuance and enforceability.

The Future of Robot Accountability

Looking ahead, we may see:

Robot licensing or certification (similar to the licensing of pilots or doctors)

AI insurance models for shared risk

“Electronic personhood” status for advanced AI (controversial)

Ethical audits before deploying AI systems in critical areas

Still, ultimate responsibility will likely remain with humans who create, deploy, or profit from AI.

Final Thoughts

Robots are tools—powerful, autonomous, and sometimes unpredictable. But as long as they lack self-awareness and moral understanding, they cannot truly be held accountable for their actions.

That responsibility remains with us—the developers, lawmakers, and users—who must ensure that ethical frameworks are in place as machines grow smarter and more capable.

Ethics must evolve alongside technology, because building smarter robots demands wiser humans.